Introduction

Cloud native architecture was designed to solve the scaling problem: how to handle 10x more users without everything falling apart, through microservices, containers and APIs that survive when components fail. AI native architecture solves the adaptation problem: how to build systems that learn, remember and respond intelligently to complex, multi-step workflows.

Picture this: you walk into a doctor’s office, but the doctor has no memory. Every time you speak, it’s like meeting them for the first time. They can’t remember your symptoms from five minutes ago, your medical history or even your name. (Yes, I can hear the movie title you’re thinking of.) They are brilliant at diagnosing individual symptoms, but they can’t connect the dots or build on previous conversations. This is exactly what happens when you try to “bolt an LLM onto” a traditional cloud native system.

The Memory Problem

Here is the fundamental issue: AI systems need memory to work properly. Unlike a simple web request that just returns data, AI applications often involve multi-step processes where each step builds on the previous one. Consider this conversation:

- A user asks about their account balance.

- Then asks, “Can I transfer ₹500 from that account?”

- Then asks, “When will that transfer complete?”

In a stateless system, each question is treated independently. The AI has no idea what “that account” refers to in step 2 or what “that transfer” means in step 3, because the system forgets everything between interactions. Memory is just the tip of the iceberg: AI applications demand entirely new architectural requirements.

AI native architecture is where the entire system is designed around the unique requirements of intelligent applications. Instead of retrofitting AI onto cloud native patterns, you build from the ground up with these principles at the core:

- Stateful by Default: Every interaction can build on previous context.

- Evaluation Driven: Quality gates and continuous monitoring are architectural components, not add-ons.

- Content Aware: Data preparation and retrieval quality are first-class concerns.

- Adaptive and Self Managing: The system learns from usage patterns and optimizes itself.

Think of it this way: cloud native is like building a highway system optimized to move lots of traffic efficiently. AI native is like building a city designed for complex interactions and sophisticated memory, one that evolves over time.

In the next section, I will break down exactly what this looks like in practice, walking through the essential components every AI native system needs to handle memory, evaluation, retrieval and adaptation reliably.

AI Native System

The AI native request lifecycle is fundamentally different. Instead of the traditional single request-response model, AI native systems require orchestrated workflows with explicit state management, quality gates and recovery mechanisms.

The Three-Layer Foundation: Cognitive Architecture Model

Perception, Reasoning & Action

A useful way to structure an AI system is as a three-layer cognitive architecture that loosely mirrors human decision-making.

Perception Layer: Transform raw input into structured, actionable state.

This layer acts as the system’s sensory interface, gathering and interpreting data from the environment or user input. It correlates new observations with session history, security context and previous interactions to form a coherent, enriched state. It also applies safety filters, PII detection and compliance checks so that data is handled responsibly and securely.

State created: Session context, user profile, security tokens, environmental metadata etc.

Reasoning Layer: Analyze context and develop execution strategies.

Serving as the AI’s cognitive center, this layer plans and decides: it decomposes tasks into subtasks, selects approaches based on confidence and constraints, and balances trade-offs among cost, latency and output quality. It tracks internal state, manages memory and reasons through problems step by step, much like human deliberation.

State created: Task plans, confidence scores, resource budgets, reasoning traces etc.

Action Layer: Orchestrate and execute plans through coordinated tool use and quality gates.

This layer executes the plans through coordinated API calls, tool interactions, process triggers or direct environment manipulation. It enforces evaluation checkpoints and quality gates before finalizing outputs. It also manages checkpoint data to enable recovery and explainability.

State created: Tool call logs, execution results, quality metrics, checkpoint data etc.
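Here is a rough Python sketch of how state might flow through the three layers. All names are hypothetical, the PII filter only redacts email addresses, and a keyword rule stands in for the LLM planner. Treat it as an illustration of the data flow, not a reference implementation.

```python
import re
from dataclasses import dataclass, field


@dataclass
class PerceivedState:
    text: str           # input after safety filtering
    session_id: str
    pii_redacted: bool


@dataclass
class Plan:
    steps: list[str]
    confidence: float


@dataclass
class ActionResult:
    outputs: list[str] = field(default_factory=list)
    checkpoints: list[int] = field(default_factory=list)  # indices of completed steps
    passed_quality_gate: bool = False


EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def perceive(raw: str, session_id: str) -> PerceivedState:
    # Perception: normalize the input and apply a (toy) PII filter.
    cleaned = raw.strip()
    redacted = EMAIL.sub("[EMAIL]", cleaned)
    return PerceivedState(redacted, session_id, pii_redacted=redacted != cleaned)


def reason(state: PerceivedState) -> Plan:
    # Reasoning: a keyword rule standing in for an LLM planner.
    if "balance" in state.text.lower():
        return Plan(steps=["authenticate", "lookup_balance"], confidence=0.9)
    return Plan(steps=["answer_directly"], confidence=0.6)


def act(plan: Plan, min_confidence: float = 0.7) -> ActionResult:
    # Action: run each step, checkpoint it, then apply a quality gate.
    result = ActionResult()
    for index, step in enumerate(plan.steps):
        result.outputs.append(f"executed:{step}")  # a real system would call a tool here
        result.checkpoints.append(index)
    result.passed_quality_gate = plan.confidence >= min_confidence
    return result


state = perceive("  What's my balance? Reply to asha@example.com ", "session-1")
plan = reason(state)
result = act(plan)
print(state.text)  # What's my balance? Reply to [EMAIL]
print(plan.steps, result.passed_quality_gate)
```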

Now let’s dive into common design patterns for building AI native systems.

Proven Design Patterns for AI Native Systems

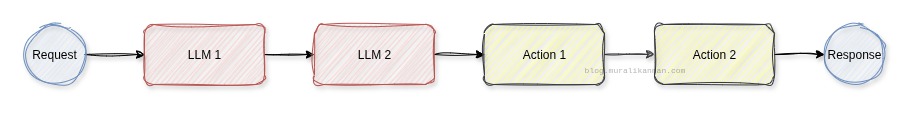

1. Controlled Flows

LLMs actively perform tasks within each workflow step, but the sequence of steps and the transition rules are fixed by design. The LLM has freedom to operate within each step but cannot choose which step comes next.

When to use: Tasks that decompose into well-defined subtasks where you need reliable, deterministic control flow even though individual steps use LLMs.

Example Applications: Content Review Workflows, Financial Document Processing etc.

Implementation Details

- Built-in error handling and retry mechanisms at each step, with fixed transition logic that prevents workflow deviation.

- Each step can use different LLMs or specialized prompts for optimization.

Benefits: Predictable execution, easy debugging and system reliability while still leveraging LLM capabilities.
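Here is a minimal Python sketch of a controlled flow. It assumes each step is a function that takes and returns a state dict. The step functions are hypothetical stand-ins for LLM calls. The point is that the runner owns the order and the retries, while each step only transforms state.

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_controlled_flow(steps: list[tuple[str, Step]], doc: dict, max_retries: int = 2) -> dict:
    """Run steps in a fixed order. A step may be retried, but it can never choose the next step."""
    state, trace = dict(doc), []
    for name, step in steps:
        for attempt in range(1, max_retries + 2):
            try:
                state = step(state)
                trace.append(f"{name}:attempt{attempt}")
                break
            except ValueError as err:  # e.g. LLM output that failed to parse
                if attempt == max_retries + 1:
                    raise RuntimeError(f"step {name!r} failed after {attempt} attempts") from err
    return {**state, "trace": trace}


# Hypothetical stand-ins for LLM-backed steps in a document-processing flow.
calls = {"classify": 0}


def extract(state: dict) -> dict:
    return {**state, "amount": 1200}


def classify(state: dict) -> dict:
    calls["classify"] += 1
    if calls["classify"] == 1:
        raise ValueError("unparseable label")  # simulate one malformed LLM response
    return {**state, "label": "invoice"}


def summarize(state: dict) -> dict:
    return {**state, "summary": f"{state['label']} for {state['amount']}"}


result = run_controlled_flow(
    [("extract", extract), ("classify", classify), ("summarize", summarize)],
    {"text": "raw document text"},
)
print(result["summary"])  # invoice for 1200
print(result["trace"])    # ['extract:attempt1', 'classify:attempt2', 'summarize:attempt1']
```

Because the step list is plain data, you can swap the model or prompt behind one step without touching the transition logic.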

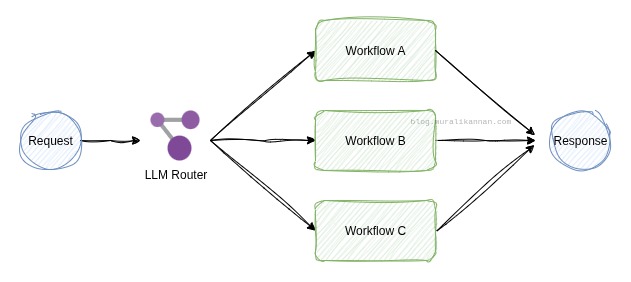

2. LLM as a Router

An LLM categorizes incoming requests and routes them to specialized workflows. Routing is based on semantic understanding of the content rather than simple keyword matching.

When to use: Incoming requests span diverse topics and complexity levels that require different processing approaches.

Example Applications: Enterprise Customer Service, IT Operations, Security Operations Centers.

Implementation details:

- Router uses classification prompts with clear categories

- Each downstream workflow can use different models/prompts

- Fallback routing for unclassifiable requests

Benefits: Accurate responses despite broad input scope & cost optimization through appropriate model selection.
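Here is a minimal Python sketch of LLM routing with a fallback. `fake_llm_classify` is a hypothetical stand-in for a real classification prompt. The useful parts are normalizing the model's free-form output and refusing any label outside the allowed set.

```python
from typing import Callable

CATEGORIES = {"billing", "it_support", "security"}
Handler = Callable[[str], str]


def route(request: str, classify: Callable[[str], str],
          workflows: dict[str, Handler], fallback: Handler) -> str:
    """Ask a classifier for one of CATEGORIES; anything unexpected goes to the fallback."""
    label = classify(request).strip().lower()  # normalize free-form LLM output
    handler = workflows.get(label) if label in CATEGORIES else None
    return (handler or fallback)(request)


def fake_llm_classify(text: str) -> str:
    # Stand-in for an LLM call. A real router would send a classification prompt
    # listing CATEGORIES and let the model judge meaning, not keywords.
    lowered = text.lower()
    if "invoice" in lowered:
        return " Billing\n"
    if "vpn" in lowered:
        return "it_support"
    return "I'm not sure"


def human_triage(request: str) -> str:
    return "human-triage"


workflows: dict[str, Handler] = {
    "billing": lambda r: "billing-workflow",
    "it_support": lambda r: "it-workflow",
    "security": lambda r: "soc-workflow",
}

for req in ["Why is my invoice higher?", "VPN keeps dropping", "Write me a poem"]:
    print(req, "->", route(req, fake_llm_classify, workflows, human_triage))
```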

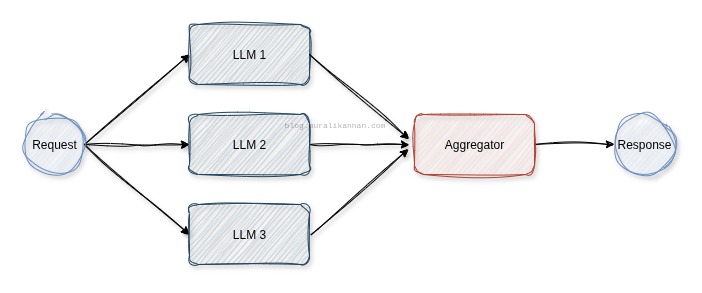

3. Parallelization

Multiple LLMs work simultaneously on the same task or on separate subtasks, and another LLM or custom logic aggregates the results. This approach uses parallel processing for independent subtasks, or improves reliability through consensus.

When to use: Tasks that naturally split into independent subtasks or when multiple attempts significantly improve output quality.

Example Applications: Code Generation, Financial Analysis, Forensic Investigation etc.

Implementation details:

- Each LLM can focus on specific aspects with specialized prompts.

- Custom aggregation logic combines results or selects optimal outputs based on confidence scores.

Benefits: Reduced latency through parallel execution, improved output quality through multiple perspectives & better reliability through consensus mechanisms.
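Here is a Python sketch of one possible aggregation strategy. It runs the workers concurrently and takes a majority vote, breaking ties by confidence. The three `model_*` functions are hypothetical stand-ins for LLM calls. Threads are a reasonable fit because real LLM calls are I/O-bound.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Answer = tuple[str, float]  # (answer, self-reported confidence)


def parallel_consensus(task: str, workers: list[Callable[[str], Answer]]) -> Answer:
    """Run all workers concurrently, then return the majority answer (ties broken by confidence)."""
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        answers = list(pool.map(lambda worker: worker(task), workers))
    votes = Counter(text for text, _ in answers)
    return max(answers, key=lambda ans: (votes[ans[0]], ans[1]))


# Hypothetical stand-ins for three LLM calls (different models or prompts).
def model_a(task: str) -> Answer:
    return ("42", 0.70)


def model_b(task: str) -> Answer:
    return ("42", 0.60)


def model_c(task: str) -> Answer:
    return ("41", 0.95)


print(parallel_consensus("What is 6 x 7?", [model_a, model_b, model_c]))  # ('42', 0.7)
```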

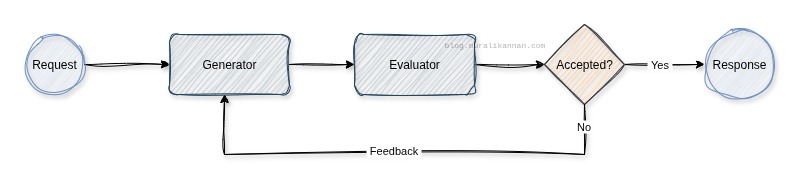

4. Reflect and Critique

One LLM generates responses while another provides critique and feedback in an iterative refinement loop. The output improves through AI-powered evaluation until it meets an acceptable quality threshold.

When to use: Tasks where iterative refinement based on clear evaluation criteria yields demonstrably better results.

Example Applications: Content Creation, Software Development, Research Analysis etc.

Implementation details:

- Maximum iteration limit (typically 3-5 cycles) prevents infinite loops.

- Clear evaluation criteria enable effective LLM reasoning.

- Includes fallback logic when consensus isn’t achieved.

Benefits: Higher quality outputs through systematic improvement, reduced hallucinations via evaluation loops & transparent refinement process.
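Here is a minimal Python sketch of the refinement loop. It assumes the critic returns a score in [0, 1] plus textual feedback. `toy_generate` and `toy_critique` are hypothetical stand-ins for the two LLMs. The iteration cap and the best-draft fallback mirror the implementation details above.

```python
from typing import Callable


def reflect_and_critique(task: str,
                         generate: Callable[[str, str], str],
                         critique: Callable[[str], tuple[float, str]],
                         threshold: float = 0.8,
                         max_iterations: int = 3) -> tuple[str, float, int]:
    """Generate, critique and revise until the score clears the threshold or the limit is hit."""
    feedback = ""
    best_draft, best_score = "", float("-inf")
    for iteration in range(1, max_iterations + 1):
        draft = generate(task, feedback)   # generator sees the critic's last feedback
        score, feedback = critique(draft)  # critic scores against explicit criteria
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= threshold:
            return draft, score, iteration
    # Fallback: nothing passed, so return the best draft (e.g. to route it to a human).
    return best_draft, best_score, max_iterations


# Hypothetical stand-ins for the generator and critic LLMs.
def toy_generate(task: str, feedback: str) -> str:
    return task if not feedback else f"{task} + {feedback}"


def toy_critique(draft: str) -> tuple[float, str]:
    return (0.9, "") if "add examples" in draft else (0.5, "add examples")


print(reflect_and_critique("Explain retries", toy_generate, toy_critique))
# ('Explain retries + add examples', 0.9, 2)
```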

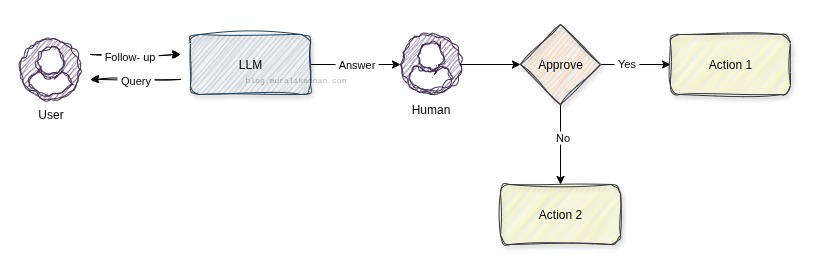

5. Human in the Loop

Strategic incorporation of human input into automated LLM pipelines at critical decision points. Humans review, validate, edit or override LLM outputs where complete automation is infeasible because of the stakes or complexity involved.

When to use: High stakes decisions, regulatory requirements or tasks requiring human judgment and accountability.

Example Applications: Healthcare Diagnostics, Financial Services, Legal Compliance, Threat Hunting etc.

Implementation details:

- Multi turn conversations for clarifications.

- Human override capabilities at critical checkpoints.

- Comprehensive audit trails for all human decisions.

Benefits: Significantly improved reliability for sensitive tasks, maintained human accountability, clarification of ambiguous requirements & regulatory compliance.

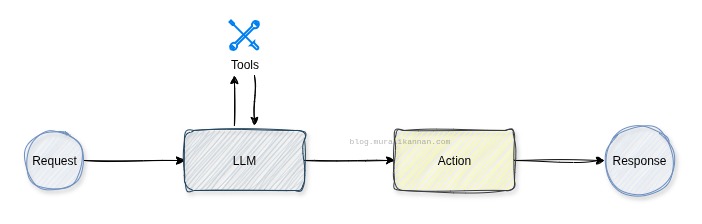

6. Single Agent Architecture

The LLM determines the sequence of steps required to complete a task, selecting tools dynamically and reasoning over its previous actions. It makes execution-flow decisions based on tool results and environmental feedback, adapting its strategy in real time.

When to use: Tasks without a structured workflow, where some added latency is acceptable and non-deterministic outputs provide value.

Example Applications: Enterprise IT Support, Investment Platforms, Autonomous Customer Service Agents etc.

Implementation details:

- Tool selection based on task analysis.

- Reasoning loops inform sequential actions.

- Can combine with other patterns (human oversight / reflection) for production reliability.

Benefits: Extreme flexibility for unstructured tasks, handles complex multi step problems & leverages comprehensive tool ecosystems.
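The core of a single agent is a loop in which the model, not the code, picks the next action. Below is a minimal Python sketch. `scripted_llm` is a hypothetical stand-in for an LLM. The step limit is the kind of guardrail that makes this pattern safer to combine with human oversight in production.

```python
from typing import Callable

Tool = Callable[[str], str]
Decision = tuple[str, str]  # (tool name or "finish", tool argument or final answer)


def run_agent(goal: str, decide: Callable[[str, list[str]], Decision],
              tools: dict[str, Tool], max_steps: int = 5) -> str:
    """The model chooses each next action from the goal and prior observations; the loop enforces limits."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = decide(goal, observations)
        if action == "finish":
            return arg
        if action not in tools:
            observations.append(f"error: unknown tool {action!r}")  # let the model recover
            continue
        observations.append(f"{action}({arg}) -> {tools[action](arg)}")
    return "stopped: step limit reached"


def scripted_llm(goal: str, observations: list[str]) -> Decision:
    # Stand-in for an LLM that picks tools by reading earlier results.
    if not observations:
        return ("check_disk", "server-7")
    if len(observations) == 1:
        return ("restart_service", "nginx")
    return ("finish", "Disk usage is fine; nginx was restarted.")


tools: dict[str, Tool] = {
    "check_disk": lambda host: "82% used",
    "restart_service": lambda name: "restarted",
}
print(run_agent("Website is down on server-7", scripted_llm, tools))
```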

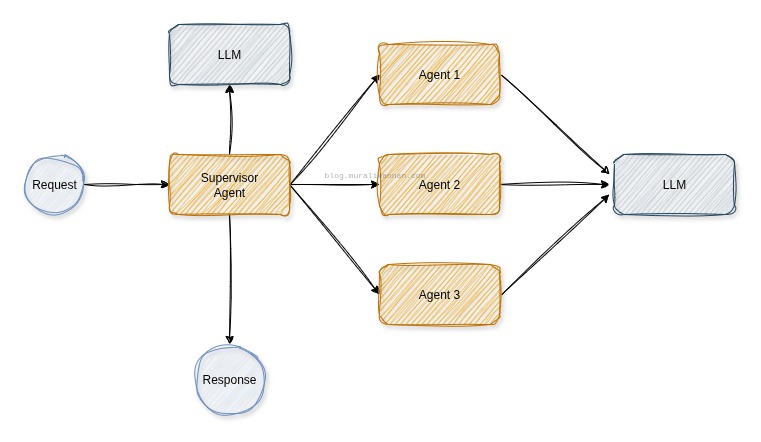

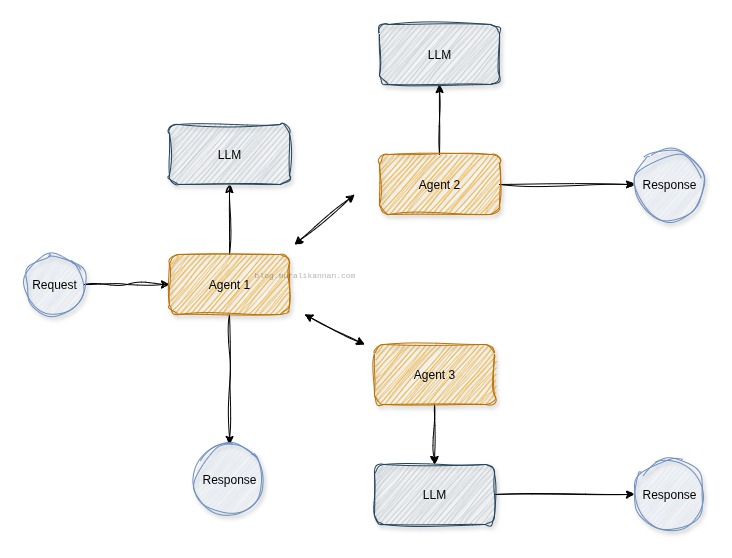

7. Multi Agent Architecture

Multiple specialized agents collaborate to accomplish complex tasks through defined coordination architectures.

Coordination architectures:

- Supervisor: Single agent orchestrates group decisions

- Sequential: Agents hand off work in predetermined order

- Network: Each agent communicates with all others

- Custom: Specialized interaction patterns for specific domains

When to use: Open-ended, experimental tasks where unpredictable outcomes are acceptable and specialization provides clear benefits.

Figure panels: Supervisor, Network

Example Applications: Scientific Research, Content Production, Enterprise Planning, Red Team Simulation.

Caveats:

- Higher costs, latency and debugging complexity.

- Requires analyzing 10-50+ LLM calls across multiple agents.

- Use with extreme caution for production applications.

Benefits: Pushes the limits of LLM autonomy, enables exploration of novel tasks & leverages specialization for complex domains.

Implementation Patterns for AI-Native Systems

Moving from architecture to code, the following implementation patterns tackle the core challenges of stateful orchestration, dynamic routing and continuous quality assurance in production-grade AI systems. Under each pattern you’ll find a mix of open source (OSS) and managed tools, so you can choose what fits your stack best.

State Management and Persistence

Checkpoint State Pattern

AI workflows can fail at any step, losing context and forcing expensive restarts. The Checkpoint State Pattern snapshots workflow state (including prompts, retrieved context and reasoning) before each major action and validates its integrity on restore. This lets a workflow resume from the last saved point rather than starting over.

Tools/Libraries:

- LangGraph (OSS): graph-based AI workflow orchestration with built-in checkpointing.

- Temporal (OSS): a durable workflow engine with reliable state persistence.

- MCP (ML Control Plane): manages ML workflow state and checkpoints.

- Redis: a fast in-memory store for transient state, with optional persistence.

Memory Hierarchy Pattern

AI systems require different memory types: working (per request), session (per user) and long-term (knowledge). The Memory Hierarchy Pattern implements tiered stores with promotion logic and archiving to durable storage.

Tools/Libraries:

- Weaviate (OSS) for semantic, vector-native memory.

- Redis (OSS) for low-latency working memory.

- PostgreSQL or MongoDB (OSS) for session persistence.

- Milvus (OSS) for long-term vector knowledge stores.

Orchestration and Flow Control

State Machine with Recovery Pattern

Complex AI workflows need predictable state transitions and rollback capabilities. The State Machine with Recovery Pattern models workflows as explicit finite state machines with compensation actions to undo or retry failed steps.

Tools/Libraries:

- LangGraph (OSS) for graph-based stateful orchestration.

- Apache Airflow (OSS) for DAG-based pipelines with retry logic.

- Temporal (OSS) for durable state machines with built-in compensation.
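Here is a minimal Python sketch of an explicit state machine with saga-style compensation. It uses the ₹500 transfer from earlier as a hypothetical example. Engines like Temporal provide durable versions of this idea; here everything is in memory.

```python
from enum import Enum
from typing import Callable

Action = Callable[[], None]


class State(Enum):
    START = "start"
    FUNDS_HELD = "funds_held"
    TRANSFERRED = "transferred"
    CONFIRMED = "confirmed"
    ROLLED_BACK = "rolled_back"


# Explicit transition table: each state has exactly one legal next state.
TRANSITIONS = {
    State.START: State.FUNDS_HELD,
    State.FUNDS_HELD: State.TRANSFERRED,
    State.TRANSFERRED: State.CONFIRMED,
}


def run_with_compensation(actions: dict[State, Action],
                          compensations: dict[State, Action]) -> tuple[State, list[str]]:
    """Advance through TRANSITIONS; on failure, undo completed steps in reverse order."""
    state, log, completed = State.START, [], []
    while state in TRANSITIONS:
        target = TRANSITIONS[state]
        try:
            actions[target]()
        except Exception as err:  # any failure triggers compensation
            log.append(f"failed entering {target.value}: {err}")
            for done in reversed(completed):
                compensations[done]()
                log.append(f"compensated {done.value}")
            return State.ROLLED_BACK, log
        completed.append(target)
        log.append(f"{state.value} -> {target.value}")
        state = target
    return state, log


# Hypothetical ₹500 transfer where the bank API fails on the second step.
ledger: list[str] = []


def failing_transfer() -> None:
    raise ConnectionError("bank API timeout")


final_state, log = run_with_compensation(
    actions={
        State.FUNDS_HELD: lambda: ledger.append("hold ₹500"),
        State.TRANSFERRED: failing_transfer,
        State.CONFIRMED: lambda: ledger.append("notify user"),
    },
    compensations={
        State.FUNDS_HELD: lambda: ledger.append("release hold"),
        State.TRANSFERRED: lambda: ledger.append("reverse transfer"),
    },
)
print(final_state, ledger)  # State.ROLLED_BACK ['hold ₹500', 'release hold']
```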

Adaptive Router Pattern

Different requests demand different processing strategies but optimal routing can’t be hard-coded. The Adaptive Router Pattern uses an LLM or lightweight classifier to route requests dynamically with performance based fallbacks.

Tools/Libraries:

- LangChain Router (OSS) for LLM-driven routing.

- A2A (Agent2Agent) Protocol (OSS, originally from Google) for routing between agents.

- Istio / Consul (OSS) for service-mesh-based health checks and weighted routing.
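Here is a minimal Python sketch of performance-based fallback. It assumes a classifier already ranks candidate routes and that each request's outcome can be reported as success or failure. The exponential moving average (alpha = 0.2) and the 0.7 health threshold are illustrative choices, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class RouteStats:
    success_rate: float = 1.0  # optimistic start so new routes receive traffic

    def record(self, ok: bool, alpha: float = 0.2) -> None:
        # Exponential moving average: recent outcomes count more than old ones.
        self.success_rate = (1 - alpha) * self.success_rate + alpha * (1.0 if ok else 0.0)


class AdaptiveRouter:
    """Hypothetical router: a classifier ranks candidate routes, live performance filters them."""

    def __init__(self, routes: list[str], fallback: str, min_success: float = 0.7) -> None:
        self.stats = {route: RouteStats() for route in routes}
        self.fallback = fallback
        self.min_success = min_success

    def choose(self, ranked_candidates: list[str]) -> str:
        # Keep the classifier's preference order, but skip routes that are underperforming.
        for route in ranked_candidates:
            stats = self.stats.get(route)
            if stats is not None and stats.success_rate >= self.min_success:
                return route
        return self.fallback

    def record(self, route: str, ok: bool) -> None:
        self.stats[route].record(ok)


router = AdaptiveRouter(["small-model", "large-model"], fallback="human-queue")
ranked = ["small-model", "large-model"]  # e.g. output of an LLM or lightweight classifier
print(router.choose(ranked))  # small-model
for _ in range(3):
    router.record("small-model", ok=False)  # 1.0 -> 0.8 -> 0.64 -> 0.512
print(router.choose(ranked))  # large-model
```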

Integration and Composition

Tool Composition Pattern

AI agents often invoke multiple external tools. The Tool Composition Pattern standardizes interfaces, enforces schema validation and implements retry/backoff logic to ensure reliable, orderly tool execution.

Tools/Libraries:

- LangChain Tools (OSS) with rich adapters for web search, databases and APIs.

- AutoGen (OSS) by Microsoft for multi-agent tool orchestration.

- Model Context Protocol (MCP) (OSS): an open standard for secure, dynamic AI tool integration.
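Here is a minimal Python sketch of a tool wrapper that validates arguments and retries transient failures with exponential backoff. The `required` mapping is a simplified stand-in for the JSON Schema that protocols such as MCP attach to each tool. `search_web` is a hypothetical flaky tool. `sleep` is injectable so the backoff can be observed without waiting.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolSpec:
    name: str
    required: dict[str, type]  # minimal "schema": argument name -> expected type
    fn: Callable[..., Any]


class TransientToolError(Exception):
    """Raised by tools for failures worth retrying (timeouts, rate limits)."""


def call_tool(spec: ToolSpec, args: dict[str, Any], retries: int = 3,
              base_delay: float = 0.1, sleep: Callable[[float], None] = time.sleep) -> Any:
    # 1. Validate arguments before touching the external system.
    for key, expected in spec.required.items():
        if not isinstance(args.get(key), expected):
            raise TypeError(f"{spec.name}: argument {key!r} must be {expected.__name__}")
    unknown = set(args) - set(spec.required)
    if unknown:
        raise TypeError(f"{spec.name}: unexpected arguments {sorted(unknown)}")
    # 2. Call with exponential backoff on transient failures only.
    for attempt in range(retries + 1):
        try:
            return spec.fn(**args)
        except TransientToolError:
            if attempt == retries:
                raise
            sleep(base_delay * 2 ** attempt)


flaky_calls = {"n": 0}


def search_web(query: str, top_k: int) -> list[str]:
    # Hypothetical tool that is rate limited on its first two calls.
    flaky_calls["n"] += 1
    if flaky_calls["n"] < 3:
        raise TransientToolError("rate limited")
    return [f"result {i} for {query}" for i in range(top_k)]


delays: list[float] = []
spec = ToolSpec("search_web", {"query": str, "top_k": int}, search_web)
print(call_tool(spec, {"query": "AI native", "top_k": 2}, sleep=delays.append))
print(delays)  # [0.1, 0.2]
```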

Quality Assurance and Evaluation

Circuit Breaker with Evaluation Pattern

AI service quality can degrade gradually, evading traditional availability checks. The Circuit Breaker with Evaluation Pattern extends circuit breakers to monitor domain-specific metrics (e.g., grounding accuracy, hallucination rate) and trips the circuit when quality falls below a threshold.

Tools/Libraries:

- Arize AI (managed; its Phoenix project is OSS) for real-time model monitoring and drift detection.

- Prometheus (OSS) with custom exporters for AI quality metrics.

- LangSmith (managed) for LLM observability and evaluation.

- OpenTelemetry (OSS) for tracing, combined with quality metrics.
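Here is a minimal Python sketch of a breaker that trips on a rolling average of quality scores rather than on errors. The scores are assumed to come from an evaluator (for example, a grounding check). The cooldown is counted in requests instead of seconds to keep the example deterministic.

```python
from collections import deque


class QualityCircuitBreaker:
    """Hypothetical breaker that trips on a quality metric (e.g. grounding score), not just errors."""

    def __init__(self, threshold: float = 0.8, window: int = 20, cooldown: int = 50) -> None:
        self.threshold = threshold
        self.scores: deque[float] = deque(maxlen=window)
        self.cooldown = cooldown
        self.open_for = 0  # remaining requests to divert while the circuit is open

    def allow_request(self) -> bool:
        if self.open_for > 0:
            self.open_for -= 1
            return False  # circuit open: caller should use a fallback path
        return True

    def record(self, quality_score: float) -> None:
        self.scores.append(quality_score)
        window_full = len(self.scores) == self.scores.maxlen
        if window_full and sum(self.scores) / len(self.scores) < self.threshold:
            self.open_for = self.cooldown
            self.scores.clear()  # after the cooldown, start measuring afresh


breaker = QualityCircuitBreaker(threshold=0.8, window=5, cooldown=3)
for score in [0.9, 0.85, 0.7, 0.6, 0.65]:  # e.g. grounding scores from an evaluator; mean is 0.74
    breaker.record(score)
print([breaker.allow_request() for _ in range(4)])  # [False, False, False, True]
```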

A/B Testing with Quality Gates Pattern

Validating AI changes requires controlled experiments and rollback capabilities. The A/B Testing with Quality Gates Pattern randomly splits traffic, measures key metrics and automatically halts deployments that fail quality gates.

Tools/Libraries:

- MLflow (OSS) for experiment tracking and gated rollouts.

- Weights & Biases (managed) for variant comparisons and alerting.

- Evidently AI (OSS) for data and model drift detection.

- Flagr (OSS) for dynamic feature flags tied to experiment status.
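Here is a minimal Python sketch of the two pieces: a stable traffic split and a gate that halts the rollout when quality drops. Hashing user IDs gives a deterministic pseudo-random split. The 0.02 maximum drop and the 100-sample minimum are illustrative. A production gate would typically add a statistical significance test rather than compare raw means.

```python
import hashlib
from statistics import mean


def assign_variant(user_id: str, treatment_share: float = 0.1) -> str:
    """Deterministic pseudo-random split: a given user always lands in the same variant."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 10_000
    return "treatment" if bucket < treatment_share * 10_000 else "control"


def quality_gate(control: list[float], treatment: list[float],
                 max_drop: float = 0.02, min_samples: int = 100) -> str:
    """Return 'wait', 'halt' or 'continue' by comparing mean quality scores per variant."""
    if min(len(control), len(treatment)) < min_samples:
        return "wait"  # not enough evidence yet
    drop = mean(control) - mean(treatment)
    return "halt" if drop > max_drop else "continue"


print(assign_variant("user-123"))                # same answer on every call
print(quality_gate([0.90] * 200, [0.85] * 200))  # halt: quality dropped by about 0.05
print(quality_gate([0.90] * 200, [0.89] * 200))  # continue
```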

Conclusion

Adopting AI native systems means designing solutions that are reliable, maintainable and adaptable. The implementation patterns we’ve covered, from Checkpoint State and Memory Hierarchies to Adaptive Routing, Tool Composition and Quality-Driven Circuit Breakers, form a practical playbook you can apply today.

- Start simple: Kick off with controlled flows or LLM routing to gain immediate & predictable wins.

- Add complexity systematically: Introduce each new pattern only when you have clear data showing its benefit.

- Design for observability: Instrument every stage so you can trace, debug and audit your workflows with confidence.

- Build evaluation first: Layer in quality gates before you let autonomous behaviors loose in production.

By following these guiding principles, you can build AI features that don’t just work in a lab but thrive in the real world, continually learning and improving without becoming a maintenance burden. Ready to take the next step? In my upcoming posts, I will explore how to orchestrate these patterns with LangGraph and similar frameworks, turning this blueprint into working code.

Citations

https://www.growthjockey.com/blogs/agent-architecture-in-ai

https://www.engati.com/blog/ai-agent-architecture

https://www.graphapp.ai/blog/cognitive-architecture-crafting-agi-systems-with-human-like-reasoning

https://arxiv.org/pdf/1702.01596

https://www.mongodb.com/resources/basics/artificial-intelligence/agentic-systems

https://arxiv.org/abs/2303.13173

https://www.infoq.com/articles/practical-design-patterns-modern-ai-systems/

https://orq.ai/blog/rag-evaluation

Image courtesy: Generated using AI (Perplexity)