WHAT IS A CLOUD NATIVE APP?

We all know the cloud. It is available as a public, private, or hybrid cloud, and it provides a whole bunch of services that we can consume out of the box.

Cloud Native is a methodology for building modern-day applications by harnessing the power of the cloud to enable our systems to be resilient, manageable & observable.

HOW DO WE DO IT?

Right from the beginning, the application is designed to run on the cloud. A cloud native app usually has the following traits.

- Microservice Based

- Containerized

- Continuously Delivered

- Dynamically Orchestrated

MICROSERVICE ARCHITECTURE

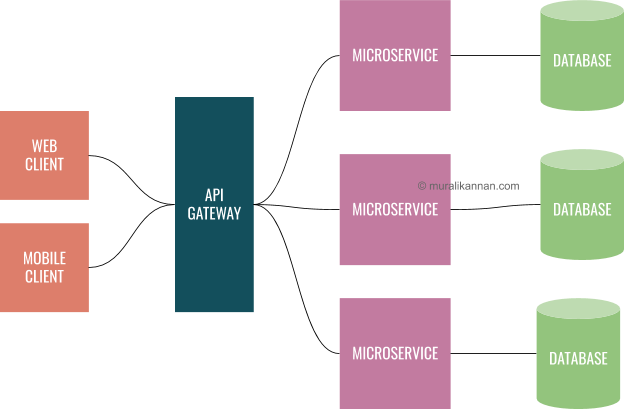

Instead of building the app as one huge monolithic codebase, Cloud Native applications are built using a microservice architecture, which is modular & loosely coupled. The application is a collection of microservices, where each service can be managed & operated independently.

Every app function is broken down into its own microservice, and the microservices communicate with each other via APIs.
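To make "communicating via APIs" concrete, here is a minimal sketch using only the Python standard library. The price service, the order logic, and the item names are all made up for illustration. A real system would more likely use a web framework and run each service in its own process or container.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Hypothetical "price" microservice: it owns the price data and exposes it over HTTP.
PRICES = {"book": 12.5, "pen": 2.0}


class PriceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        item = self.path.strip("/")
        if item in PRICES:
            status, payload = 200, {"item": item, "price": PRICES[item]}
        else:
            status, payload = 404, {"error": "unknown item"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example output quiet


def start_price_service():
    """Start the price service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), PriceHandler)  # port 0 = pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def order_total(price_service_url, item, quantity):
    """Hypothetical "order" service logic: it only knows the price service's API."""
    with urlopen(f"{price_service_url}/{item}") as response:
        price = json.load(response)["price"]
    return price * quantity


if __name__ == "__main__":
    server = start_price_service()
    url = f"http://127.0.0.1:{server.server_address[1]}"
    print(order_total(url, "book", 2))
    server.shutdown()
```

The order logic never touches the `PRICES` dictionary directly. It only depends on the HTTP contract, which is what lets the two services be changed and deployed independently.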

A few advantages of microservices are:

- Managed Independently

- Container friendly

- Horizontally Scalable on-demand

- Fits into CI/CD pipelines

- Better fault isolation

Because each microservice has its own independent context, it can be designed in its own way, and different programming languages & frameworks can be used to build different microservices within the same application. Each microservice can also have its own database.

CONTAINERIZED

Cloud Native apps are containerized. Containers are scalable & easily portable between environments. We can use a tool like Docker to containerize our application. Now that we have our microservices, let's pack them into containers.

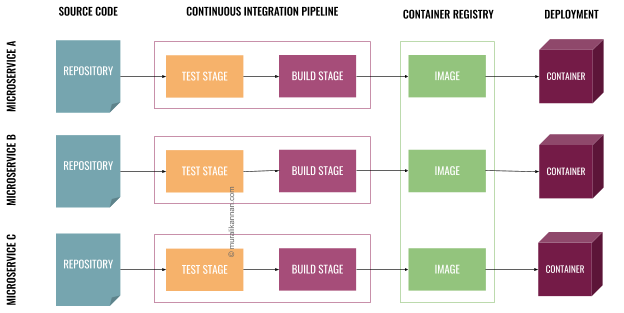

Since we have a dedicated pipeline to build each microservice, the image is pushed to a centralized (public or private) registry after each successful build, and from there it can be pulled and deployed in our desired environment. This way, we can keep all versions of our builds in the registry & roll back to a previous version easily if needed.
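The push, deploy, and roll back flow can be sketched with a toy in-memory model. This is not how a real registry works internally. `ImageRegistry`, `Environment`, and the image name `cart` are hypothetical names used only to show why keeping every version makes a rollback cheap.

```python
class ImageRegistry:
    """Toy in-memory stand-in for a container registry (hypothetical)."""

    def __init__(self):
        self._tags = {}  # image name -> list of tags, in push order

    def push(self, name, tag):
        self._tags.setdefault(name, []).append(tag)

    def tags(self, name):
        return list(self._tags.get(name, []))


class Environment:
    """Toy deployment target that remembers which tag of each image is running."""

    def __init__(self, registry):
        self.registry = registry
        self.running = {}  # image name -> currently deployed tag

    def deploy(self, name, tag):
        if tag not in self.registry.tags(name):
            raise ValueError(f"{name}:{tag} is not in the registry")
        self.running[name] = tag

    def rollback(self, name):
        """Redeploy the tag that was pushed just before the running one."""
        tags = self.registry.tags(name)
        index = tags.index(self.running[name])
        if index == 0:
            raise ValueError(f"no earlier version of {name} to roll back to")
        self.deploy(name, tags[index - 1])


if __name__ == "__main__":
    registry = ImageRegistry()
    registry.push("cart", "1.0.0")
    registry.push("cart", "1.1.0")

    staging = Environment(registry)
    staging.deploy("cart", "1.1.0")
    staging.rollback("cart")
    print(staging.running)
```

Because the older tag is still stored, rolling back is just another deploy of an image that already exists. Nothing has to be rebuilt.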

CONTINUOUSLY DELIVERED

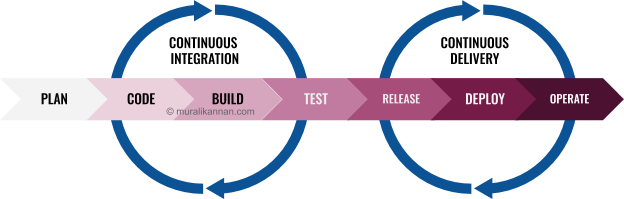

Whenever we make code changes to our application, we want to release them to our end-users as soon as possible. This is a tedious task if done manually, so let's automate it. This is where CI/CD comes in.

As we can see from the above diagram, each microservice has its own code repository & a dedicated continuous integration pipeline. Whenever we make code changes to a particular microservice, its dedicated CI pipeline is triggered, and after the test & build stages have run, the final image is pushed to the container registry. In this way, each microservice is updated independently without disturbing the other microservices in the system.

From the planning stage to the deployment stage, we need to follow a streamlined DevOps process. The goal is to deploy our changes to our desired environment as soon as possible without human intervention. When things break, the CI/CD cycle is repeated until we get the desired outcome.
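The test, build, and push stages described above can be sketched as a small function. Real pipelines are normally defined in a CI tool's own configuration format, so treat this as an illustration of the control flow only. `run_pipeline`, the service name, and the commit id are made up, and the registry is just a Python list.

```python
def run_pipeline(service, commit, tests, registry):
    """Run test -> build -> push for one microservice; stop at the first failure.

    `tests` is a list of zero-argument callables returning True on success.
    `registry` is a plain list standing in for a container registry.
    """
    log = []

    # Test stage: a single failing test aborts the pipeline.
    for test in tests:
        if not test():
            log.append("test: failed")
            return log  # nothing is built or pushed
    log.append("test: passed")

    # Build stage: here we only compute the image reference, tagged with the commit.
    image = f"{service}:{commit}"
    log.append(f"build: {image}")

    # Push stage: publish the image so it can be pulled and deployed later.
    registry.append(image)
    log.append(f"push: {image}")
    return log


if __name__ == "__main__":
    registry = []
    for line in run_pipeline("cart", "abc123", [lambda: True], registry):
        print(line)
```

Each microservice would get its own call with its own tests, so a failure in one service's pipeline never blocks an image push for another.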

DYNAMICALLY ORCHESTRATED

Cloud Native applications are dynamically managed & operated in the cloud. Now that we have our microservices, we need a way to deploy, scale & manage them automatically.

To achieve this, a container orchestration tool is used, for example Kubernetes (K8S), Docker Swarm, or Apache Mesos. The container orchestration tool helps us achieve the following without much hassle.

- Deployments

- Scaling

- Networking

- Management & Insights

If we don’t use a container orchestration tool, we have to deploy our containers manually, create our own load balancers, and build our own services & service discovery. Instead of reinventing the wheel, we can just use a container orchestration platform.
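To get a feel for what we would otherwise have to build ourselves, here is a rough sketch of service discovery with round-robin load balancing. It is a simplified illustration, not how any particular orchestrator implements it. `ServiceRegistry`, the service name, and the addresses are hypothetical.

```python
class ServiceRegistry:
    """Toy service discovery: maps a service name to its container addresses
    and hands them out in round-robin order."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of addresses
        self._counters = {}   # service name -> number of lookups so far

    def register(self, service, address):
        self._endpoints.setdefault(service, []).append(address)
        self._counters.setdefault(service, 0)

    def deregister(self, service, address):
        self._endpoints[service].remove(address)

    def resolve(self, service):
        """Return the next address for the service, cycling through all of them."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no healthy endpoints for {service}")
        index = self._counters[service] % len(endpoints)
        self._counters[service] += 1
        return endpoints[index]


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("cart", "10.0.0.1:8080")
    registry.register("cart", "10.0.0.2:8080")
    for _ in range(3):
        print(registry.resolve("cart"))
```

Even this toy version has to track which containers exist and keep that list current as containers come and go. That bookkeeping is the part an orchestration platform takes off our hands.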

Abstract illustration for visualization purpose

Once our microservice image has been updated in the container registry, we can expect the container orchestration tool to perform the following operations for each microservice in a fully automated way.

- Pulling images from the container registry & deploying the pods

- Scheduling pods across nodes

- Creating replicas as needed

- Enabling access to the pods using services

- Creating service discovery to provide network connectivity to pods

- Managing the pod lifecycle

- Providing storage services to attach persistent volumes to our pods to persist data

(In a K8S context, a pod is a wrapper around one or more containers. A pod can have a single container or multiple containers, based on its configuration.)
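The common idea behind scheduling pods and creating replicas is a loop that keeps comparing the desired state with the actual state and corrects the difference. The sketch below is a heavily simplified illustration of that idea, assuming a naive "fewest pods first" placement rule. The `reconcile` function and the node and pod names are hypothetical and not taken from any real orchestrator.

```python
import itertools


def reconcile(desired_replicas, nodes, pod_ids):
    """Make the number of running pods match `desired_replicas`.

    `nodes` maps a node name to the list of pod names running on it and is
    modified in place. `pod_ids` is an iterator that yields fresh pod numbers.
    """
    if not nodes:
        raise ValueError("no nodes available to schedule pods on")

    running = sum(len(pods) for pods in nodes.values())

    # Too few pods: schedule each new one on the node with the fewest pods.
    while running < desired_replicas:
        target = min(nodes, key=lambda name: len(nodes[name]))
        nodes[target].append(f"pod-{next(pod_ids)}")
        running += 1

    # Too many pods: remove from the most loaded node first.
    while running > desired_replicas:
        target = max(nodes, key=lambda name: len(nodes[name]))
        nodes[target].pop()
        running -= 1

    return nodes


if __name__ == "__main__":
    pod_ids = itertools.count(1)
    nodes = {"node-a": [], "node-b": []}

    reconcile(3, nodes, pod_ids)   # initial deployment with three replicas
    print(nodes)

    nodes["node-a"].pop()          # simulate a pod crashing
    reconcile(3, nodes, pod_ids)   # the loop restores the missing replica
    print(nodes)
```

Scaling up, scaling down, and recovering from a crashed pod all go through the same function. Only the desired number or the actual state changes.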

A container orchestration tool is also capable of creating & managing multiple worker nodes on multiple hosts.

We can achieve all this just by writing the configuration in a YAML file.
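As a rough idea of what such a configuration contains, the sketch below builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The field names follow the `apps/v1` Deployment schema. The service name, image reference, replica count, and port are made-up values, and `deployment_manifest` is a hypothetical helper.

```python
import json


def deployment_manifest(name, image, replicas, port):
    """Build a minimal apps/v1 Deployment manifest for one microservice."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # How many copies of the pod we want running.
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest(
        name="cart",
        image="registry.example.com/cart:1.0.0",  # hypothetical image reference
        replicas=3,
        port=8080,
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and handed to `kubectl apply -f`, the same way a hand-written YAML file would be. In practice, most teams write the YAML directly.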

CNCF - CLOUD NATIVE COMPUTING FOUNDATION

The Cloud Native Computing Foundation (CNCF) was formed in 2015 by the Linux Foundation to advance container technology and align the tech industry around its evolution. CNCF hosts projects for building modern-day cloud native applications and classifies them by maturity level.

CLOUD NATIVE TRAIL MAP

CNCF has created a Trail Map to help us understand the cloud native approach and explore the tools that are available to build Cloud Native applications. It does not recommend one specific trail but instead allows us to choose our own path to reach our cloud native destination.

Since the Trail Map is so large, I am providing a link to it instead of reproducing it here: Cloud Native Trail Map

Currently CNCF is backed by 450+ sponsors. The founding members of CNCF are Google, CoreOS, Mesosphere, Red Hat, Twitter, Huawei, Intel, Cisco, IBM, Docker, Univa, and VMware.

There we go. Now we understand what a Cloud Native application is and how to create & manage one.

We will discuss orchestration tools in technical detail, with code samples & examples, in a future post (the link will be posted here once it is published).