Containers have become the de facto standard for packaging and deploying applications. Since many of us come from a virtual machine (VM) background, we often mistake containers for a simple VM replacement and try to apply VM practices to them. However, containers are not a drop-in replacement for VMs, since they serve a different purpose. These small, self-contained components promote portability, efficiency, scalability, and security, in line with the goals of the Open Container Initiative (OCI), the open governance body that maintains standards for container formats and runtimes.

In this post, I have consolidated a few anti-patterns that developers and deployment engineers tend to fall into when dealing with containerized deployments. These are the major misconceptions I have observed when dealing with containers. The patterns I’m going to talk about are mainly concerned with production deployments and should not be confused with development or lower environments. Without further ado, let’s jump into it.

TOP 10 CONTAINER ANTI PATTERNS TO AVOID

- Treating Containers as VMs

- Mutating a running container / Immutable Infrastructure

- Monolithic Containers

- Manual Container Management

- Stateful Containers

- Using ‘Latest’ Tags

- Overusing Volume Mounts

- Not Setting Resource Limits

- Ignoring Networking Considerations

- Neglecting Security Updates

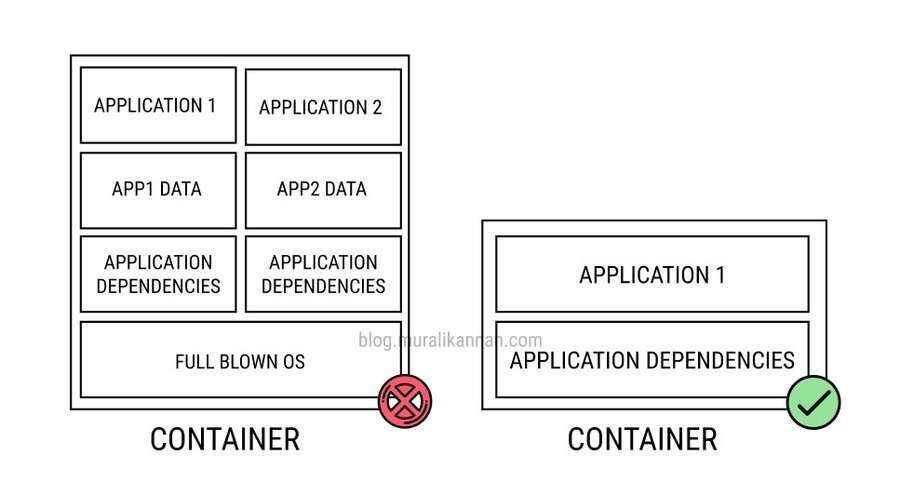

1. TREATING CONTAINERS AS VMs

Treating containers as VMs negates many of the advantages that containers offer. Containers are designed to be lightweight, portable, and efficient. A VM provides a fully isolated environment with its own operating system, while containers share the kernel of the underlying host operating system. Treating containers like VMs can add overhead, reduce performance, and introduce security risks.

THINGS TO AVOID

- Avoid assigning full VM-like resources to containers, which negates their efficient utilization.

- Don’t bloat container images with unnecessary operating system components, making them bulky.

- Avoid configuring containers to rely on specific host OS versions or configurations, as this hinders portability.

- Avoid slowing down scaling by manually managing containers like VMs. Embrace automation and orchestration tools for better container management.

- Don’t forget that containers should be portable across different environments and treating them like VMs can disrupt this.

BEST PRACTICES

- Start with lightweight base images and build container images that only include necessary dependencies and files.

- Follow the single responsibility principle by running a single application or process within each container.

- Use external storage solutions or databases for managing persistent data.

- Define resource limits (CPU and memory) for containers to ensure fair resource allocation.

- Treat containers as immutable. When changes are needed, create new container images and deploy them.

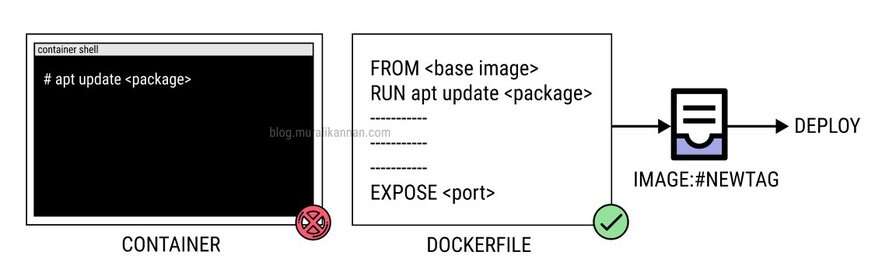

2. MUTATING A RUNNING CONTAINER / IMMUTABLE INFRASTRUCTURE

Immutable infrastructure is a deployment strategy in which infrastructure components are not changed once they are deployed. Containers are designed to be immutable: they are not supposed to be changed once they are created. This makes containers easier to manage and deploy, and it helps ensure that your applications always run in a known and consistent state. Mutating a running container violates these principles by changing the container’s state, which can make it difficult to troubleshoot problems, reproduce the environment, and maintain security.

THINGS TO AVOID

- Refrain from making direct changes or updates to a running container’s state.

- Do not install or update software in a running container.

- Do not attempt to apply configuration changes or updates to a container without recreating it.

- Avoid updating container images without changing the version or tag, as this can lead to inconsistency.

BEST PRACTICES

- Design your applications to be stateless, storing no data inside the container, so they can be easily restarted from scratch if needed.

- Design containers to be immutable from the beginning, considering environment variables and configurations as constants.

- Embrace the “immutable deployment” concept, where each update results in a new, immutable container instance rather than modifying existing ones.

- Integrate CI/CD pipelines to automate the building, testing, and deployment of container images.

- Do not perform routine maintenance, updates, or scaling operations on a running container. Instead, replace it with a new, updated instance.
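
To illustrate the idea of treating configuration as constants, here is a minimal Python sketch, not a definitive implementation. It reads settings once at startup into a frozen dataclass, so any later attempt to change them fails loudly. The variable names `APP_DATABASE_URL` and `APP_LOG_LEVEL` and the `AppConfig` class are hypothetical and chosen only for this example.

```python
import os
from dataclasses import dataclass, FrozenInstanceError
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    """Configuration read once at startup and never changed afterwards."""
    database_url: str
    log_level: str


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    # APP_DATABASE_URL and APP_LOG_LEVEL are hypothetical variable names.
    return AppConfig(
        database_url=env["APP_DATABASE_URL"],
        log_level=env.get("APP_LOG_LEVEL", "INFO"),
    )


if __name__ == "__main__":
    config = load_config({"APP_DATABASE_URL": "postgres://db.example.internal/app"})
    try:
        config.log_level = "DEBUG"  # mutation is rejected by the frozen dataclass
    except FrozenInstanceError:
        print("config is immutable; deploy a new container to change it")
```

With this shape, changing a setting means starting a new container with different environment variables rather than editing a running one.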

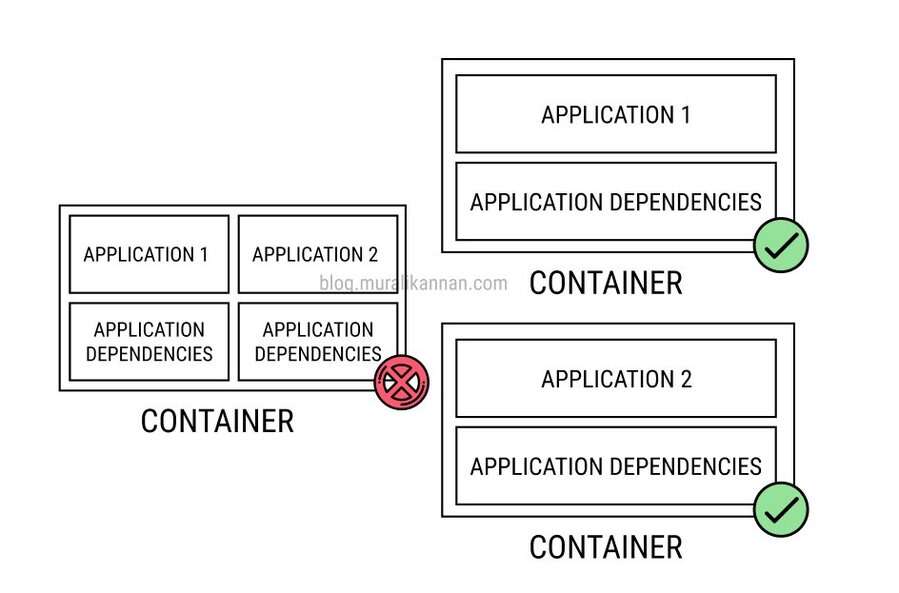

3. MONOLITHIC CONTAINERS

The “Monolithic Containers” anti-pattern involves packaging an entire application stack, including multiple services and dependencies, within a single container. While containers are designed to be lightweight and modular, this approach consolidates everything into one unit. The result is inefficient resource usage, decreased scalability, and operational complexity, which makes it challenging to scale, maintain, and update applications. A better approach is to use a microservices architecture and run each component of your application in its own container. This will improve performance, scalability, and maintainability.

THINGS TO AVOID

- Avoid packaging all services and dependencies within a single container, which leads to a lack of modularity.

- Do not tightly couple services within a container, as this makes it challenging to independently update or scale individual components.

- Refrain from inefficient resource allocation by assigning excessive CPU and memory to monolithic containers.

- Avoid hindering scalability; monolithic containers limit the ability to scale specific services independently.

BEST PRACTICES

- Follow the principle of one service per container, ensuring each container addresses a specific task.

- Ensure that services within containers are loosely coupled, allowing for independent updates and scaling.

- Optimize resource utilization by allocating the right amount of CPU and memory to each container.

- Implement service discovery mechanisms to allow containers to find and communicate with one another.

- Embrace a microservices architecture, breaking down applications into smaller, independently deployable containers.

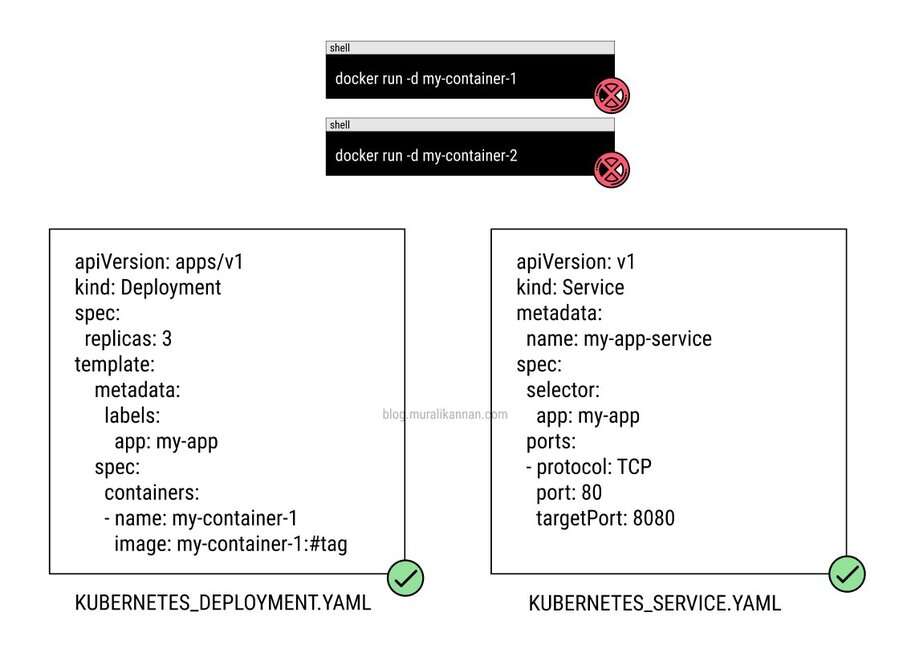

4. MANUAL CONTAINER MANAGEMENT

This involves the manual and ad-hoc management of containers without leveraging container orchestration and automation tools. Containerization offers the promise of automation, scalability, and simplified operations, but deviating from these core principles by manually starting, stopping, and configuring containers can introduce inefficiency, human error, and operational challenges. Container orchestration tools like Kubernetes are designed to streamline container management, enabling automated scaling, load balancing, self-healing, and robust resource management. Ignoring these tools and opting for manual management can undermine the benefits of containerization and result in complex, error-prone operational procedures.

THINGS TO AVOID

- Avoid manually deploying containers without container orchestration tools, which leads to inefficiencies and increased operational overhead.

- Avoid manually scaling containers by adding or removing instances individually and opt for automated scaling mechanisms.

- Do not manage container configurations individually, as this can result in inconsistencies and difficulty in tracking changes.

- Avoid manually routing traffic to containers without automated load balancing, which can lead to uneven distribution of requests.

- Do not disregard the importance of automated health checks and self-healing mechanisms, as this impacts application reliability.

BEST PRACTICES

- Implement Infrastructure as Code (IaC) practices to define and manage container deployments in a version-controlled, automated manner.

- Integrate containers into your CI/CD pipeline to automate the building, testing, and deployment of containerized applications.

- Adhere to immutable infrastructure principles, treating containers as disposable entities that are replaced rather than modified.

- Use auto-scaling features provided by orchestration tools to automatically adjust the number of containers based on demand.

- Use automated load balancing and traffic routing solutions to efficiently distribute requests across container instances.
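
The self-healing and auto-scaling behavior described above rests on a simple idea: compare the desired state with the actual state and compute the difference. The following Python sketch shows that idea in its simplest form. It is an illustration under my own assumptions, not how Kubernetes or any other orchestrator is implemented; the `Action` type and the service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    kind: str      # "start" or "stop"
    service: str
    count: int


def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[Action]:
    """Return the actions needed to move actual replica counts to desired ones."""
    actions = []
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if have < want:
            actions.append(Action("start", service, want - have))
        elif have > want:
            actions.append(Action("stop", service, have - want))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    actual = {"web": 1, "worker": 2, "legacy": 1}
    for action in reconcile(desired, actual):
        print(action)
```

An orchestrator runs a loop like this continuously, which is why a crashed container gets replaced without anyone logging in to restart it.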

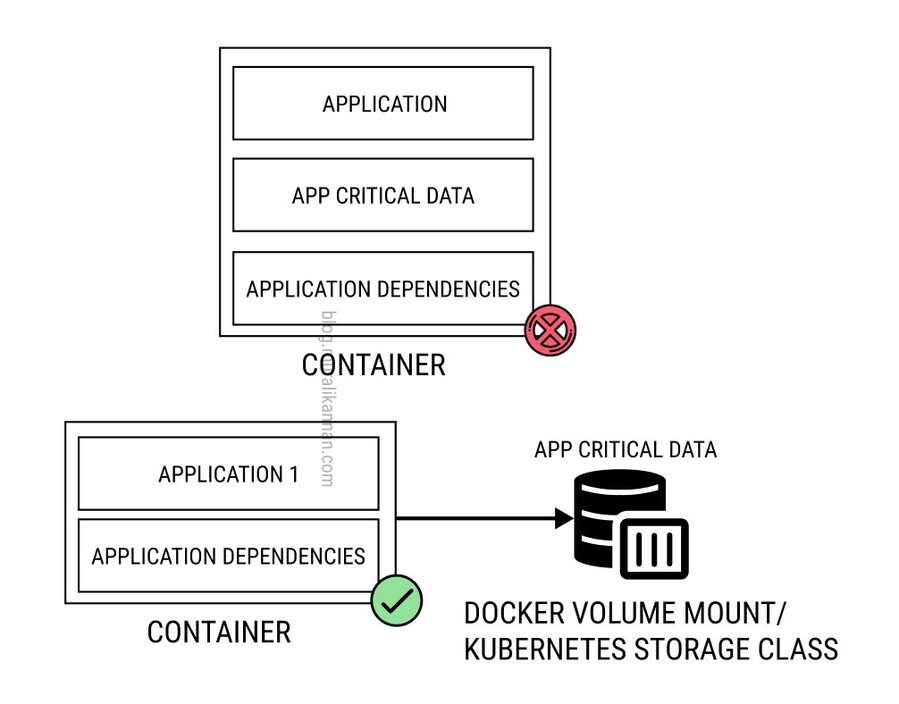

5. STATEFUL CONTAINERS

Containers are inherently designed to be stateless and ephemeral. They should not store critical data or state information internally. Storing state inside containers can lead to operational complexity, increased risk of data loss, and reduced portability, as containers become tightly coupled to specific data storage and behave like traditional stateful systems such as VMs. In a containerized environment, it’s essential to decouple state from containers and employ external, stateful data services such as databases, object storage, or network-attached storage for managing persistent data. By doing so, you can fully embrace the principles of containerization, maintaining agility, scalability, and portability while safeguarding your critical data.

THINGS TO AVOID

- Avoid storing long-term, persistent data directly within containers, as it can lead to data loss and scalability issues.

- Avoid limiting the scalability of your application by tying stateful data to containers and opt for more scalable storage solutions.

- Do not neglect data persistence challenges, as this can result in data loss when containers are recreated or scaled.

- Avoid complicating failover procedures when containers encounter issues, which can be challenging when stateful data is involved.

- Refrain from relying solely on container backups for long-term data retention and implement dedicated data backup and recovery solutions.

BEST PRACTICES

- When running stateful applications in containers, use volume mounts, such as Docker volumes or Kubernetes persistent volumes provisioned through a storage class, for data separation and resilience.

- Employ external data storage solutions or databases for managing long-term stateful data instead of storing it directly within containers.

- Utilize container orchestration platforms like Kubernetes to manage stateful services and enable features like StatefulSets and PersistentVolumes.

- Implement data replication and backup strategies to ensure data durability and recoverability even in the event of container failures.

- Plan for horizontal scalability by allowing stateful services to dynamically scale across multiple containers or pods.

- Set up regular data backups, snapshots, and recovery processes to safeguard stateful data and ensure business continuity.

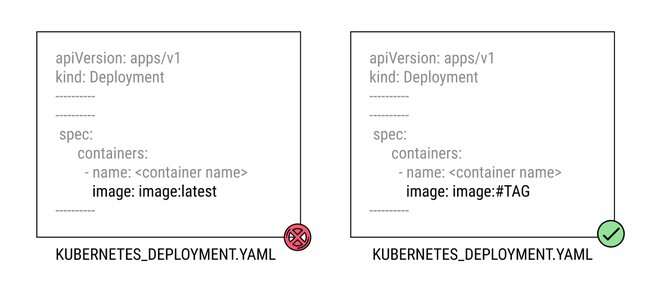

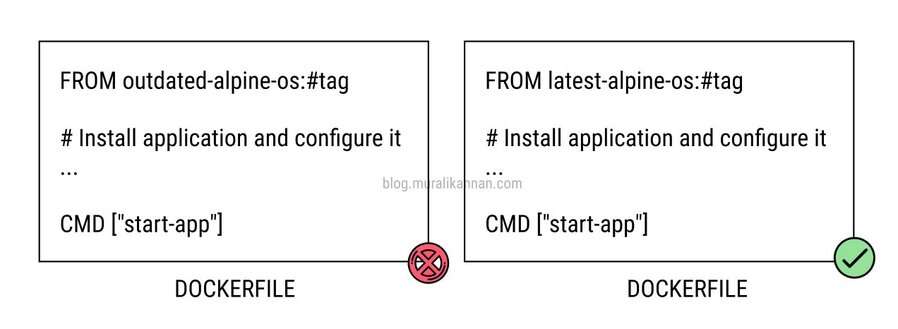

6. USING ‘LATEST’ TAGS

It may sound counterintuitive, but the “latest” tag does not necessarily refer to the latest stable version of an image, especially for images that are not built or controlled by you. It is simply the default tag applied when none is specified, so it typically points to whichever image was most recently pushed without an explicit tag. This practice can lead to unintended consequences, such as untested or incompatible updates being deployed in production. Relying on specific version tags for container images ensures reproducibility, consistent deployments, and greater control over the software environment, reducing the chances of unexpected issues arising due to the hasty adoption of the “latest” image.

THINGS TO AVOID

- Avoid using the “latest” tag for production containers, as it can lead to unpredictable and potentially unstable updates.

- Do not deploy containers without ensuring that the “latest” image is fully compatible with your application, dependencies, and environment.

- Do not omit version tags, as this makes it challenging to track and reproduce specific software configurations.

- Do not overlook the importance of reproducibility, which is essential for maintaining consistent and reliable deployments.

- Do not deploy without specific version tags, as this makes rollbacks harder: if an issue arises, it can be challenging to revert to a known working state.

BEST PRACTICES

- Establish registry policies that encourage the use of version tags and discourage the use of “latest” tags.

- Always use specific version tags for your container images, ensuring you have complete control over what version is deployed.

- Adhere to semantic versioning (SemVer) for version tags, making it clear when changes are backward-compatible or breaking.

- Implement an image promotion workflow that ensures images are thoroughly tested in lower environments before deploying in production while also adhering to a “build once, deploy many” paradigm.

- Clearly document and communicate the use of version tags and their meanings within your development and operations teams.
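
A policy that discourages “latest” is easier to follow when it is checked automatically. The Python sketch below shows one way such a check could look, for example as a step in a CI pipeline. The function name `is_pinned` and the example registry host are hypothetical, and the parsing is deliberately simplified: it does not validate the digest format or the full image reference grammar.

```python
def is_pinned(image: str) -> bool:
    """Return True if the reference has a digest or an explicit non-'latest' tag."""
    # A digest (image@sha256:...) identifies exact content, so it counts as pinned.
    if "@" in image:
        return True
    # Only look for a tag in the last path segment, so that a registry port
    # such as "registry.example.com:5000/app" is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    _name, separator, tag = last_segment.partition(":")
    if not separator or not tag:
        return False  # no tag means the implicit "latest"
    return tag != "latest"


if __name__ == "__main__":
    for ref in ("nginx", "nginx:latest", "nginx:1.25.3",
                "registry.example.com:5000/team/app:2.4.1"):
        print(ref, "->", "pinned" if is_pinned(ref) else "NOT pinned")
```

Pinning by digest is the strictest option, since a version tag can still be moved to a different image unless the registry enforces tag immutability.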

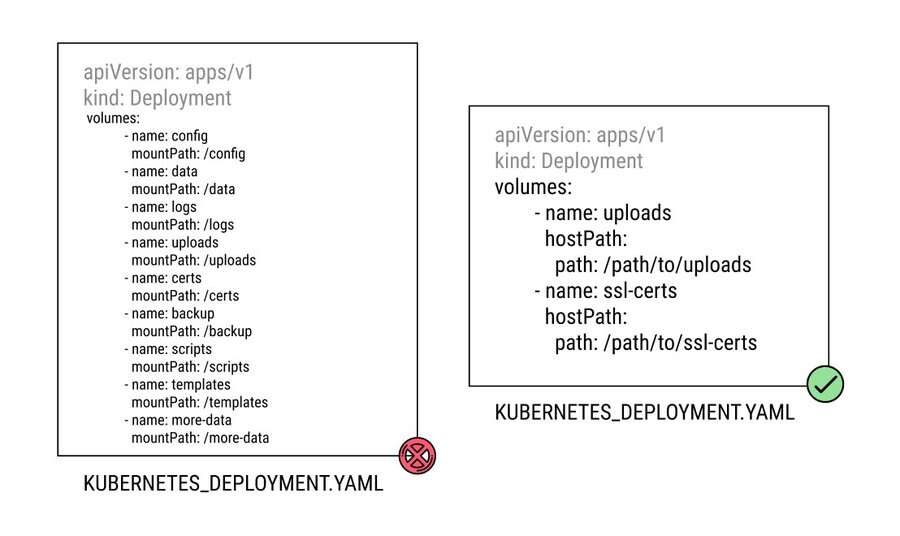

7. OVERUSING VOLUME MOUNTS

While volume mounts are valuable for providing access to persistent data, relying on them excessively can hinder the encapsulation and isolation benefits of containerization. Overusing volume mounts can lead to unwieldy, hard-to-maintain configurations, making it challenging to reproduce deployments consistently across different environments. To mitigate this problem, it’s crucial to strike a balance by considering alternatives like external storage solutions, data orchestration, and data persistence mechanisms within container images, ensuring that volume mounts are used judiciously and purposefully.

THINGS TO AVOID

- Avoid mounting large amounts of data or file systems directly into containers, as it can hinder the portability and isolation of containers.

- Avoid allowing the number of volume mounts to grow uncontrollably within containers, leading to operational and deployment challenges.

- Do not overuse volume mounts for storing large datasets or application code, as this can lead to unwieldy container setups.

- Avoid tightly coupling containers with their data through excessive volume mounts, making it difficult to update or scale components independently.

- Avoid excessive data duplication within volume mounts to prevent inefficient resource usage and increased storage demands. Neglecting this practice can lead to container inconsistencies across various environments due to external data dependencies.

BEST PRACTICES

- Separate application code and data, placing only necessary data in volume mounts, while keeping the application itself within the container.

- Utilize external data storage solutions or databases for persistent data that doesn’t need to be directly mounted into containers.

- Design containers with configurable data paths to allow flexibility in specifying which data should be mounted, enabling easier reuse and deployment across environments.

- Optimize the use of volume mounts by regularly reviewing and pruning unnecessary data and ensuring that only essential data is included.

- Optimize data usage by avoiding unnecessary data duplication or large volumes of data directly mounted within containers.

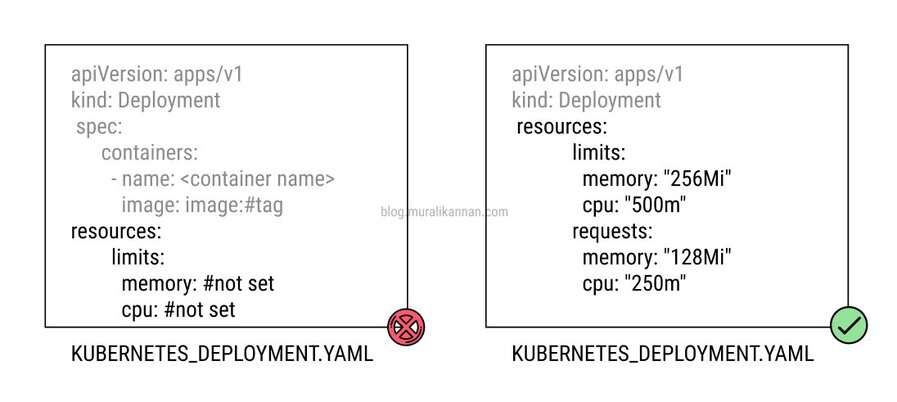

8. NOT SETTING RESOURCE LIMITS

Containers are incredibly efficient but can be resource-hungry when left unchecked. Failing to define limits on CPU and memory usage can lead to resource contention, potentially causing poor performance, instability, and service disruptions. To mitigate this problem, it’s crucial to set appropriate resource limits for each container, ensuring that applications run smoothly without overburdening the host system. Resource management is a fundamental aspect of container orchestration, and neglecting it can undermine the efficiency and predictability that containerization offers.

THINGS TO AVOID

- Avoid allowing containers to consume unlimited CPU and memory resources, which can lead to resource contention and performance degradation.

- Do not leave resource limits unset, as this can starve other containers on the same host of essential resources.

- Failing to set resource limits may result in inefficient resource usage, as containers may consume more than they actually need.

- Neglecting resource constraints can hinder scalability, as unbounded containers may lead to imbalanced resource allocation when scaling.

- Avoid containers “hoarding” resources, which can negatively impact the overall system and fairness among applications.

BEST PRACTICES

- Define resource limits, including CPU and memory, for each container based on its specific requirements.

- Conduct load testing to determine appropriate resource limits for each container, ensuring they can handle peak workloads without overconsumption.

- Plan scaling strategies that take into account the set resource limits, ensuring consistent and predictable behavior when scaling containers.

- Use Kubernetes Quality of Service (QoS) classes, which Kubernetes assigns to pods based on their resource requests and limits, to prioritize critical containers while constraining less critical ones.

- Periodically review and adjust resource limits as the application’s requirements change or when performance issues are identified.
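
To make the link between resource settings and QoS classes concrete, here is a simplified Python sketch of how the three Kubernetes QoS classes (Guaranteed, Burstable, BestEffort) follow from CPU and memory requests and limits. It is my own approximation for illustration, not Kubernetes code. The `Container` class is hypothetical, and quantities are compared as plain strings, so equivalent values written differently (such as `500m` and `0.5`) would not be recognized as equal here.

```python
from dataclasses import dataclass, field

RESOURCES = ("cpu", "memory")


@dataclass
class Container:
    requests: dict[str, str] = field(default_factory=dict)
    limits: dict[str, str] = field(default_factory=dict)


def qos_class(containers: list[Container]) -> str:
    """Approximate the QoS class of a pod from its containers' settings."""

    def has_any_setting(c: Container) -> bool:
        return any(r in c.requests or r in c.limits for r in RESOURCES)

    def is_guaranteed(c: Container) -> bool:
        for resource in RESOURCES:
            limit = c.limits.get(resource)
            # An unset request defaults to the limit.
            request = c.requests.get(resource, limit)
            if limit is None or request != limit:
                return False
        return True

    if not any(has_any_setting(c) for c in containers):
        return "BestEffort"
    if all(is_guaranteed(c) for c in containers):
        return "Guaranteed"
    return "Burstable"


if __name__ == "__main__":
    print(qos_class([Container()]))
    print(qos_class([Container(limits={"cpu": "500m", "memory": "256Mi"})]))
    print(qos_class([Container(requests={"memory": "128Mi"})]))
```

The practical takeaway is that a container with no requests or limits at all lands in BestEffort, the class that is first in line for eviction when a node runs short of resources.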

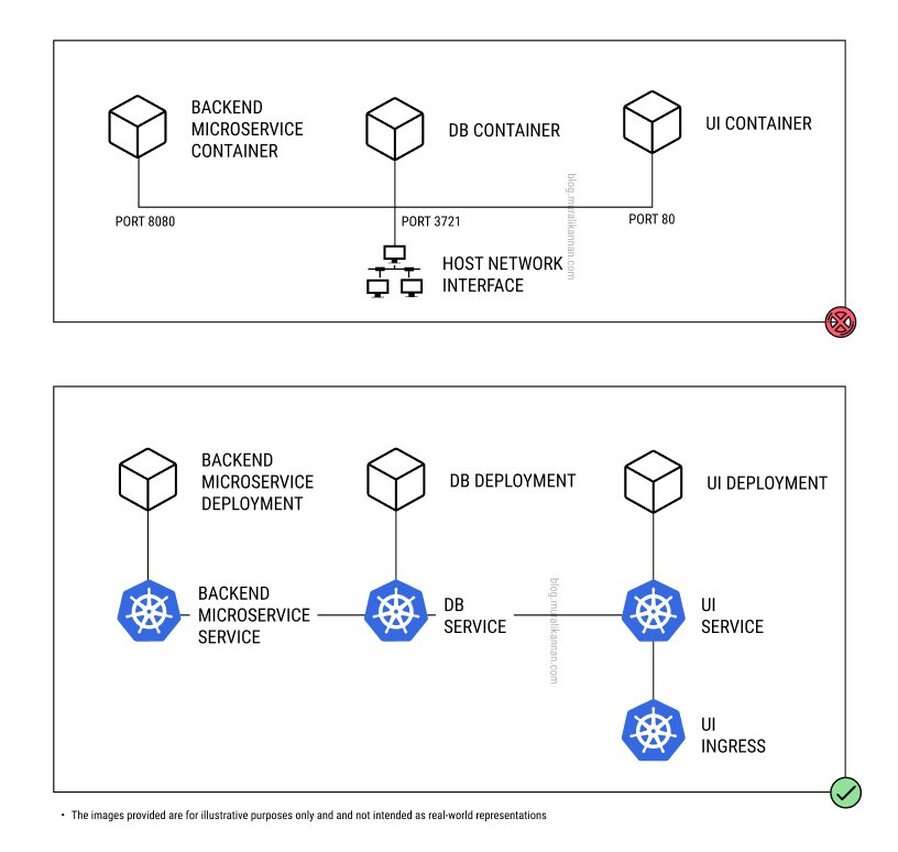

9. IGNORING NETWORKING CONSIDERATIONS

Ignoring networking considerations is a container anti-pattern that occurs when developers do not account for networking when designing and deploying containerized applications. This can lead to a number of problems, including performance issues, security vulnerabilities, and compliance issues.

THINGS TO AVOID

- Avoid treating all containers as part of a flat network without proper segmentation, increasing security risks.

- Do not ignore the need for service discovery mechanisms that enable containers to locate and communicate with each other.

- Avoid neglecting load balancing, which distributes network traffic among containers to ensure even workloads.

- Avoid statically assigning IP addresses to containers, as it hinders the flexibility and scalability of container deployments.

- Do not neglect container isolation, strong authentication, or network monitoring and auditing, and do not forgo a container orchestration platform.

- Refrain from allowing unsecured communication between containers, leaving sensitive data vulnerable to interception.

BEST PRACTICES

- Implement network segmentation to separate containers based on their role and sensitivity level.

- Implement service discovery mechanisms, such as Docker’s built-in DNS-based service discovery, Kubernetes Services, or a service mesh, to enable containers to locate and communicate with each other.

- Use load balancers, such as a Kubernetes Service, to evenly distribute traffic across containers, preventing network congestion.

- Leverage container networking tools like Docker’s built-in networking, Kubernetes network policies, or third-party solutions for better network control.

- Conduct regular security audits and vulnerability assessments to identify and address potential network security weaknesses.
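
One networking mistake that is easy to catch automatically is a hard-coded IP address where a DNS name should be. The Python sketch below flags such entries in a simple mapping of endpoint names to hosts. The function name, the endpoint names, and the addresses are hypothetical examples; real configuration would need its own parsing.

```python
import ipaddress


def find_static_ips(endpoints: dict[str, str]) -> list[str]:
    """Return the names of endpoints whose host is a literal IP address."""
    flagged = []
    for name, host in endpoints.items():
        try:
            ipaddress.ip_address(host)
        except ValueError:
            continue  # not an IP literal, so presumably a DNS name
        flagged.append(name)
    return flagged


if __name__ == "__main__":
    endpoints = {
        "orders": "10.0.3.17",  # static IP: breaks when the container is rescheduled
        "payments": "payments.default.svc.cluster.local",  # resolved by service discovery
    }
    print(find_static_ips(endpoints))
```

Hosts that pass this check are resolved at connection time, so the container behind the name can be replaced or rescheduled without touching the configuration.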

10. NEGLECTING SECURITY UPDATES

Containers are not immune to vulnerabilities, and failing to regularly update base images or address known security issues can expose applications to serious risks. This anti-pattern poses a direct threat to data integrity, operational stability, and the overall security of the containerized environment. It is imperative to prioritize security by actively monitoring, scanning, and updating container images and configurations to protect against potential exploits and vulnerabilities.

THINGS TO AVOID

- Avoid neglecting to apply updates for both the container image and its underlying operating system, which can leave known vulnerabilities unpatched.

- Refrain from using outdated or deprecated container base images that are no longer supported or maintained.

- Do not hardcode sensitive information like credentials or API keys within container images, as this can lead to security breaches if the image is compromised.

- Neglecting regular security scanning for container images can leave you unaware of potential vulnerabilities.

- Do not skip real-time monitoring and logging, which are crucial for detecting and responding to security incidents.

- Do not leave keys, passwords, and secrets unrotated, as this increases the risk of unauthorized access if credentials are exposed.

BEST PRACTICES

- Ensure timely updates of both container images and their underlying operating systems to patch known vulnerabilities.

- Use digital signatures and image signing to verify the integrity of container images and detect unauthorized modifications.

- Follow the principle of least privilege, granting containers only the necessary permissions and access required for their tasks.

- Use secure, external secrets management systems to store sensitive information, ensuring that secrets are never hardcoded within container images.

- Implement automated security scanning for images, along with continuous monitoring and alerting for running containers, to identify potential security threats and incidents in your containerized environment.

- Establish role-based access control (RBAC) for container orchestration systems, limiting access to authorized personnel.

- Ensure that your team is well-trained in security best practices, including secure coding, and that security awareness is a top priority.
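
As an illustration of keeping secrets out of images, the Python sketch below reads a secret at runtime from a mounted file and falls back to an environment variable. It is one possible shape, not a prescribed implementation. The function name `read_secret` and the secret name are hypothetical, and the default directory `/run/secrets` is an assumption based on where Docker mounts secrets for Swarm services; your platform may mount them elsewhere.

```python
import os
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Read a secret from a mounted file, falling back to an environment variable."""
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        return secret_file.read_text().strip()
    # Fallback: an environment variable named after the secret, in upper case.
    value = os.environ.get(name.upper())
    if value is None:
        raise RuntimeError(f"secret {name!r} was not provided")
    return value


if __name__ == "__main__":
    try:
        password = read_secret("db_password")  # hypothetical secret name
        print("secret loaded, length:", len(password))
    except RuntimeError as error:
        print(error)
```

Because the value is supplied when the container starts, the same image can be promoted across environments, and rotating a credential does not require a rebuild.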

CONCLUSION

Beyond avoiding these container anti-patterns, embrace common deployment best practices such as automated deployments, version control, immutable infrastructure, environment isolation, scaling strategies, security measures, rollback plans, monitoring and logging, and documentation.

Citations:

https://opencontainers.org/about/overview/

https://landscape.cncf.io/guide#provisioning--automation-configuration

https://landscape.cncf.io/guide#provisioning--security-compliance

https://landscape.cncf.io/guide#provisioning--summary-provisioning

https://landscape.cncf.io/guide#orchestration-management

https://landscape.cncf.io/guide#orchestration-management--service-mesh

https://docs.docker.com/develop/develop-images/dockerfile_best-practices/

https://docs.docker.com/develop/develop-images/guidelines/

https://docs.docker.com/develop/security-best-practices/

https://kubernetes.io/docs/concepts/configuration/overview/

Image Courtesy: Created by Me using Adobe Firefly AI